The history of computing is not merely a chronological list of dates; it is an evolution of logic gate density, material science, and instruction set architectures. Computer generations are classified not by the calendar, but by the fundamental hardware technology used to perform logic operations. From the thermal inefficiency of vacuum tubes to the probabilistic nature of qubits, each generation represents a paradigm shift in how humanity processes information.

This guide provides a deep technical analysis of the five established generations and the emerging sixth era, utilizing architectural data to visualize the transition from serial processing to parallel cognitive computing.

Introduction to Computer Generations

Computer generations are distinct stages in the development of electronic computing technology, categorized by the primary component used for logic processing and memory storage.

Historically, the transition between these generations was marked by a fundamental change in hardware: switching from vacuum tubes to transistors, then to integrated chips. Today, the lines are blurring as we move from hardware-defined generations (VLSI) to capability-defined generations (Artificial Intelligence and quantum computing).

- Key Insight: Generations often overlap. The “First Generation” didn’t disappear overnight in 1956; military legacy systems used vacuum tubes well into the 1960s, while research labs were already prototyping integrated circuits.

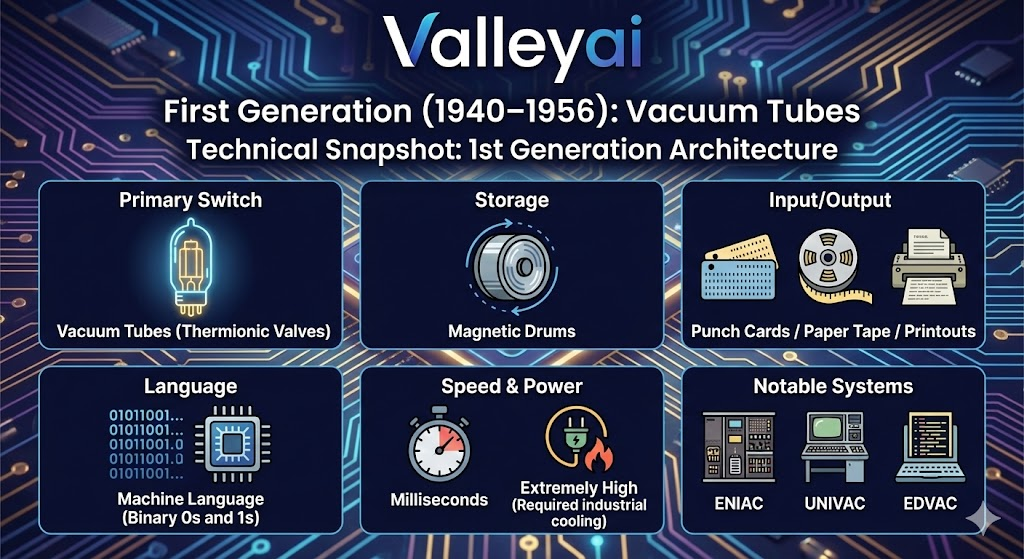

First Generation (1940–1956): Vacuum Tubes

Technical Snapshot: 1st Generation Architecture

| Feature | Specification |
|---|---|
| Primary Switch | Vacuum Tubes (Thermionic Valves) |
| Storage | Magnetic Drums |
| Input/Output | Punch Cards / Paper Tape / Printouts |
| Language | Machine Language (Binary 0s and 1s) |
| Speed | Milliseconds |
| Power/Heat | Extremely High (Required industrial cooling) |
| Notable Systems | ENIAC, UNIVAC, EDVAC |

The first generation of computers relied on vacuum tubes for circuitry and magnetic drums for memory, characterized by immense physical size and reliance on machine language for operation. These systems were strictly calculating devices designed for ballistics and scientific computation, lacking the operating systems or multitasking capabilities of modern hardware.

Architectural Analysis: The Thermal Bottleneck

The defining engineering constraint of the first generation was heat. Vacuum tubes function via thermionic emission: a filament is heated to release electrons. As a result, systems like the ENIAC (Electronic Numerical Integrator and Computer), which contained roughly 18,000 tubes, consumed about 150 kilowatts of electricity. When a tube blew (which happened frequently), the system halted.

From a logic perspective, these machines were rigid. Programming was not typing code; it was manual rewiring. It wasn’t until the implementation of John von Neumann’s architecture, which introduced the concept of stored programs in memory, that computers became versatile tools rather than static calculators.

Which technology was used in the first generation of computers?

The primary technology was vacuum tubes. These fragile glass bulbs acted as electronic switches to control the flow of electricity, enabling binary logic gates.

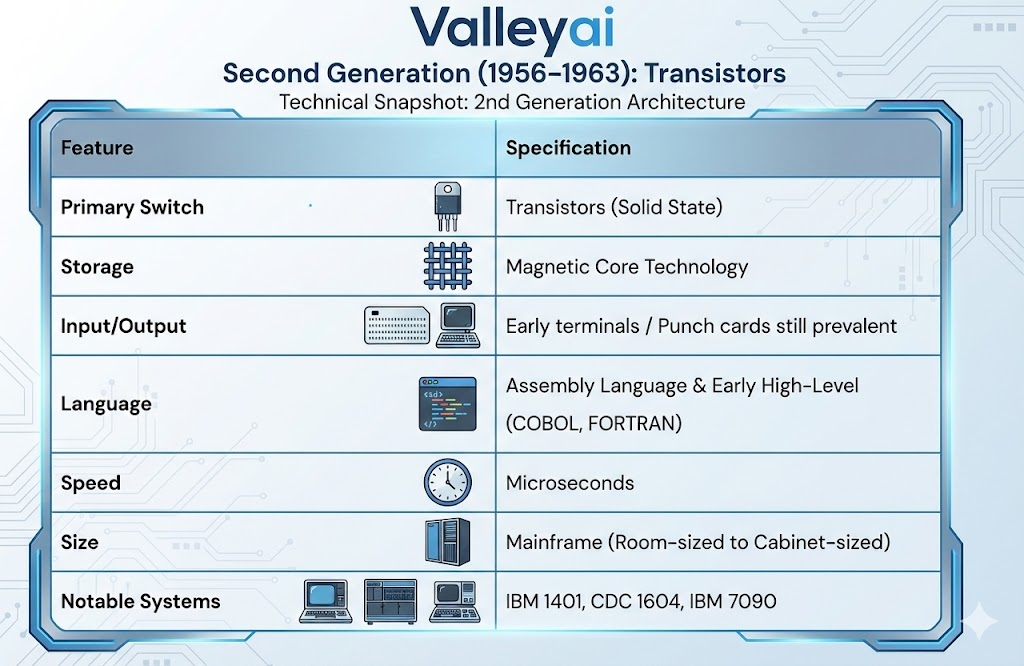

Second Generation (1956–1963): Transistors

Technical Snapshot: 2nd Generation Architecture

| Feature | Specification |
|---|---|
| Primary Switch | Transistors (Solid State) |
| Storage | Magnetic Core Technology |
| Input/Output | Early terminals / Punch cards still prevalent |
| Language | Assembly Language & Early High-Level (COBOL, FORTRAN) |
| Speed | Microseconds |
| Size | Mainframe (Room-sized to Cabinet-sized) |
| Notable Systems | IBM 1401, CDC 1604, IBM 7090 |

The second generation of computers was defined by the replacement of vacuum tubes with transistors, allowing computers to become smaller, faster, cheaper, and more energy-efficient. This era introduced the concept of software distinct from hardware, moving from binary machine code to symbolic Assembly and early high-level languages.

Architectural Analysis: The Solid-State Revolution

The invention of the transistor at Bell Labs was the turning point for computing. Unlike tubes, transistors required no heater filament, drastically reducing power consumption and failure rates. This reliability allowed for more complex architectures.

This generation also saw the shift from magnetic drums to magnetic core memory, a non-volatile form of random-access memory (RAM) consisting of tiny ferrite rings strung on wire grids. Each ring stored one bit as the direction of its magnetization.

The Software Shift:

Crucially, this generation introduced Assembly Language. Instead of remembering binary opcodes (e.g., 101100), programmers could use mnemonics (e.g., ADD, MOV). This abstraction layer led to the creation of COBOL and FORTRAN, allowing code to be written for the logic of the problem rather than the geometry of the hardware.
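To make the mnemonic-to-opcode idea concrete, here is a minimal sketch of a toy assembler in Python. The opcode table, the 4-bit encodings, and the `assemble` function are hypothetical and chosen only for illustration; they do not correspond to any real second-generation machine.

```python
# Hypothetical 4-bit opcodes for an imaginary machine (not a real instruction set).
OPCODES = {
    "LOAD": 0b0001,
    "ADD": 0b0010,
    "STORE": 0b0011,
    "HALT": 0b1111,
}


def assemble(source: str) -> list[str]:
    """Translate mnemonic lines into 8-bit binary strings.

    Each instruction is encoded as a 4-bit opcode followed by a 4-bit operand.
    """
    words = []
    for line in source.strip().splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= operand <= 0b1111:
            raise ValueError(f"operand out of range: {operand}")
        word = (OPCODES[mnemonic] << 4) | operand
        words.append(format(word, "08b"))
    return words


program = """
LOAD 5
ADD 3
STORE 9
HALT
"""

for word in assemble(program):
    print(word)
```

The programmer writes `ADD 3`; the assembler produces the bit pattern the hardware actually reads. High-level languages such as COBOL and FORTRAN push the same idea further by translating whole statements rather than single instructions.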

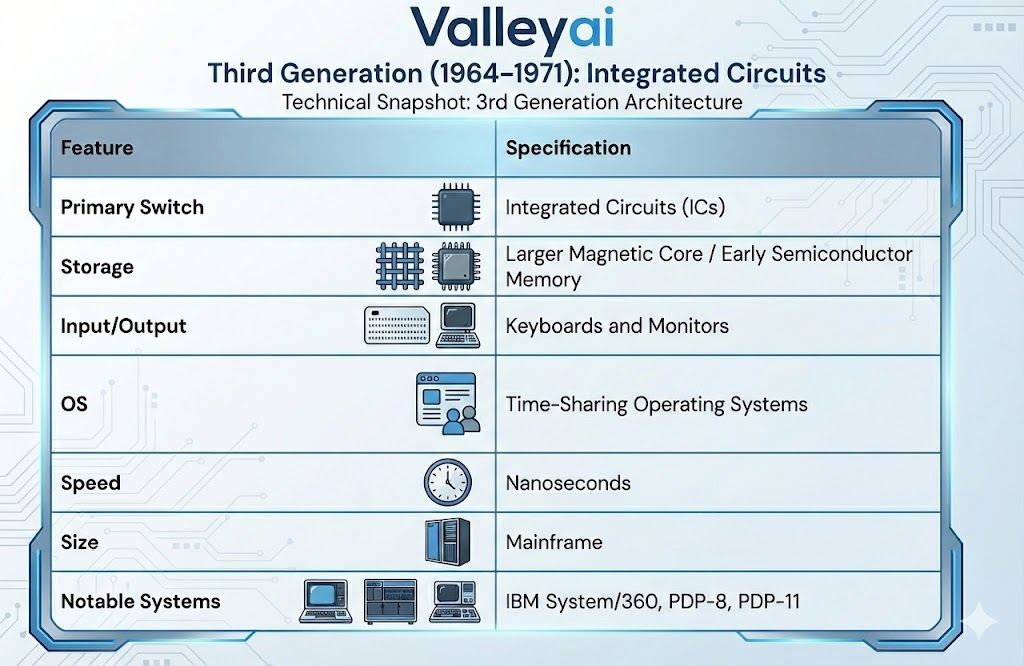

Third Generation (1964–1971): Integrated Circuits

Technical Snapshot: 3rd Generation Architecture

| Feature | Specification |
|---|---|
| Primary Switch | Integrated Circuits (ICs) |
| Storage | Larger Magnetic Core / Early Semiconductor Memory |
| Input/Output | Keyboards and Monitors |
| OS | Time-Sharing Operating Systems |
| Speed | Nanoseconds |
| Notable Systems | IBM System/360, PDP-8, PDP-11 |

The third generation of computers emerged with the development of the Integrated Circuit (IC), which placed multiple transistors onto a single silicon chip. This miniaturization allowed for the birth of the operating system, enabling machines to run multiple applications seemingly at once (time-sharing) and letting users interact via keyboards and monitors rather than punch cards.
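Time-sharing can be illustrated with a round-robin scheduler: each job gets a short slice of CPU time, then goes to the back of the queue if it still has work left. The sketch below is a simplified model, not how any particular third-generation operating system was implemented; the job names and the `round_robin` function are made up for the example.

```python
from collections import deque


def round_robin(jobs: dict[str, int], quantum: int) -> list[str]:
    """Return the order in which jobs receive CPU time slices.

    jobs maps a job name to the units of work it still needs;
    quantum is the maximum amount of work done per slice.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        timeline.append(name)
        remaining -= quantum
        if remaining > 0:
            queue.append((name, remaining))  # not finished: back of the line
    return timeline


print(round_robin({"payroll": 3, "inventory": 1, "report": 2}, quantum=1))
# ['payroll', 'inventory', 'report', 'payroll', 'report', 'payroll']
```

Because the slices are short, every user sees their job making progress, even though the processor is only ever working on one job at a time.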

Architectural Analysis: Standardization and Scale

Before 1964, computers were custom-built entities. If a company upgraded its computer, it had to rewrite all its software. The IBM System/360 changed this by introducing a compatible family of computers. Software written for a small Model 30 could run on a large Model 75.

The Silicon Shift:

Jack Kilby and Robert Noyce’s invention of the IC meant that logic gates didn’t need to be soldered individually. They could be photolithographically printed. This drastically increased processing speed into the nanosecond range and reduced costs enough to introduce “minicomputers” into medium-sized businesses.

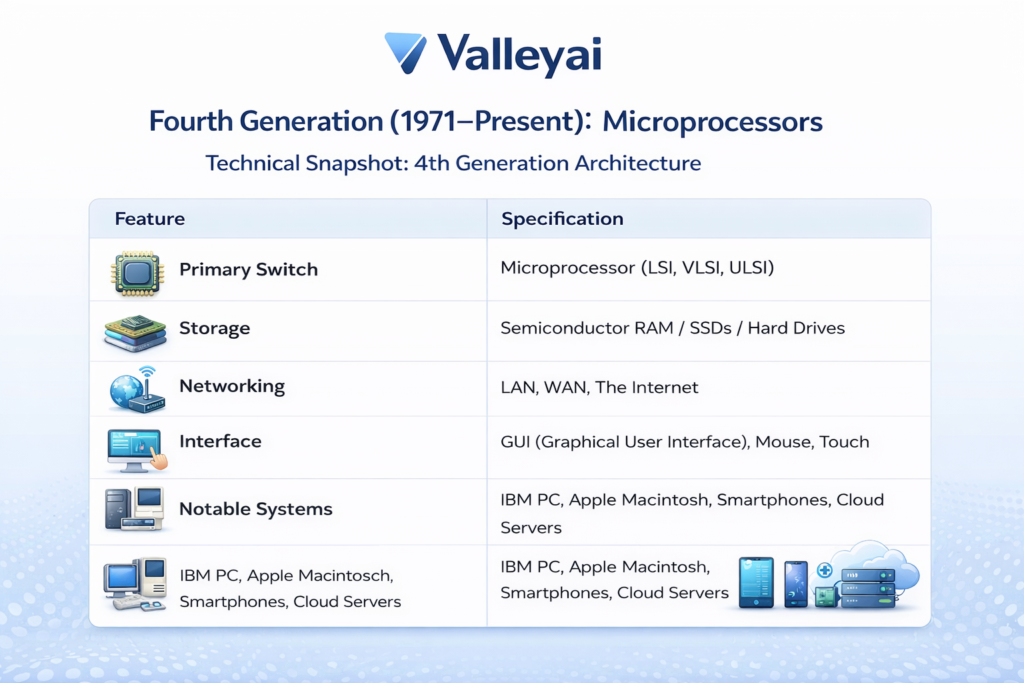

Fourth Generation (1971–Present): Microprocessors

Technical Snapshot: 4th Generation Architecture

| Feature | Specification |
|---|---|
| Primary Switch | Microprocessor (LSI, VLSI, ULSI) |
| Storage | Semiconductor RAM / SSDs / Hard Drives |
| Networking | LAN, WAN, The Internet |
| Interface | GUI (Graphical User Interface), Mouse, Touch |
| Notable Systems | IBM PC, Apple Macintosh, Smartphones, Cloud Servers |

Fourth-generation computers are characterized by the microprocessor, which consolidates the entire Central Processing Unit (CPU) onto a single silicon chip using Very Large Scale Integration (VLSI). This generation triggered the personal computer revolution, the internet, and mobile computing, bringing computation to the mass market.

Architectural Analysis: Density and VLSI

The Intel 4004 (1971) held 2,300 transistors. Modern processors built with Ultra Large Scale Integration (ULSI) hold billions. A persistent constraint throughout this generation is the von Neumann bottleneck: despite massive speed increases, the architecture still fetches data from memory, processes it, and writes it back, so throughput is limited by the path between processor and memory.
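The fetch, process, and write-back cycle can be sketched as a toy interpreter. This is an illustrative model only: the four instructions and the `run` function are invented for the example, and the program is kept in a separate list for readability, whereas a real von Neumann machine stores instructions in the same memory as data.

```python
def run(program: list[tuple[str, int]], memory: list[int]) -> list[int]:
    """Execute a toy program against a memory list and return that memory.

    LOAD, ADD, and STORE each touch memory once, which is the traffic
    the von Neumann bottleneck refers to.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter
    while pc < len(program):
        op, addr = program[pc]      # fetch and decode
        pc += 1
        if op == "LOAD":
            acc = memory[addr]      # read from memory
        elif op == "ADD":
            acc += memory[addr]     # read from memory, then process
        elif op == "STORE":
            memory[addr] = acc      # write the result back to memory
        elif op == "HALT":
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
    return memory


memory = [7, 35, 0]
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", 0)]
print(run(program, memory))  # [7, 35, 42]
```

Adding two numbers takes three trips to memory here. Making the arithmetic faster does not shorten those trips, which is why memory access tends to dominate as processors speed up.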

While we have remained in the “Microprocessor” era for 50 years, the generation is split into distinct phases:

- Personal Computing: Bringing the CPU to the desktop (1980s).

- Networked Computing: Connecting CPUs via the web (1990s).

- Mobile/Cloud Computing: Decoupling the interface from the processing power (2000s–2010s).

What is the difference between 4th and 5th generation computers?

The main difference is the processing model. 4th Generation computers (current PCs/phones) use microprocessors to execute explicitly programmed instructions, largely in sequence. 5th Generation computers utilize massively parallel processing and AI algorithms to solve unstructured problems, learn from data, and understand natural language.

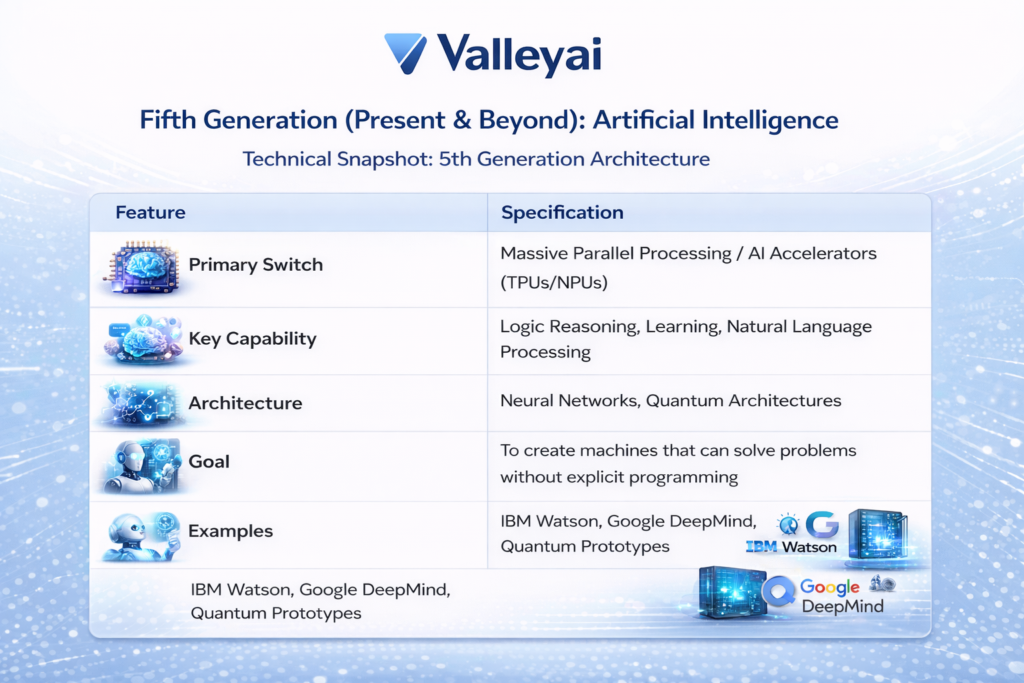

Fifth Generation (Present & Beyond): Artificial Intelligence

Technical Snapshot: 5th Generation Architecture

| Feature | Specification |
|---|---|
| Primary Switch | Massive Parallel Processing / AI Accelerators (TPUs/NPUs) |
| Key Capability | Logic Reasoning, Learning, Natural Language Processing |
| Architecture | Neural Networks, Quantum Architectures |
| Goal | To create machines that can solve problems without explicit programming |
| Examples | IBM Watson, Google DeepMind, Quantum Prototypes |

Fifth-generation computing is defined by the application of Artificial Intelligence (AI) and parallel processing hardware to enable machines to simulate human reasoning. Unlike previous generations focused on hardware miniaturization, this generation focuses on capability: voice recognition, decision-making, and self-learning algorithms.

Architectural Analysis: Beyond Binary

While we often run AI on 4th gen hardware (CPUs/GPUs), true 5th generation architecture changes the hardware itself. We are seeing the rise of NPUs (Neural Processing Units) designed specifically for tensor operations used in Deep Learning.
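The tensor operation at the heart of most deep learning workloads is matrix multiplication, which reduces to a long series of multiply-accumulate steps. The sketch below spells that out in plain Python; the `matmul` function and the sample weights are illustrative assumptions. The loop runs one step at a time, whereas an accelerator is designed to perform many of these steps in parallel.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                out[i][j] += a[i][k] * b[k][j]  # one multiply-accumulate
    return out


inputs = [[1.0, 2.0]]                    # one sample with two features
weights = [[0.5, -1.0], [0.25, 2.0]]     # two inputs feeding two outputs
print(matmul(inputs, weights))  # [[1.0, 3.0]]
```

Every multiply-accumulate for a given output cell is independent of the other cells, which is what makes this workload a natural fit for parallel hardware.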

Is there a 6th Generation? The Quantum Leap

Some computer scientists argue that Quantum Computing represents a distinct 6th Generation.

- Classical Computers (1st-5th Gen): Use bits (0 or 1).

- Quantum Computers (6th Gen): Use qubits, which can exist in a superposition (a weighted combination of 0 and 1 that resolves to a single value only when measured).
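Superposition can be simulated for a single qubit on a classical machine. The sketch below is a simplified model: it uses real-valued amplitudes (in general they are complex numbers), and the `hadamard` and `probabilities` helpers are written for this example rather than taken from a quantum library.

```python
import math

# A single-qubit state is a pair of amplitudes (alpha, beta) for |0> and |1>.
ZERO = (1.0, 0.0)


def hadamard(state: tuple[float, float]) -> tuple[float, float]:
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state: tuple[float, float]) -> tuple[float, float]:
    """Return the chance of measuring 0 and of measuring 1."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


superposed = hadamard(ZERO)
print(probabilities(superposed))  # roughly (0.5, 0.5)
```

A classical bit would be either 0 or 1 at this point. The qubit carries both amplitudes until it is measured, at which point it yields 0 or 1 with the probabilities shown.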

Is there a 6th generation of computer?

While not officially standardized, Quantum Computing and Nanocomputers are widely considered the emerging 6th generation. These systems utilize quantum mechanics to tackle problems that are intractable for traditional silicon-based microprocessors, such as complex molecular modeling and certain cryptographic problems.

Summary Table of Computer Generations

For a quick comparison of the technological evolution, refer to the table below. Note the trajectory from hardware size to processing logic.

| Generation | Period (Approx.) | Core Technology | Speed Metric | Language Type |
|---|---|---|---|---|
| 1st Gen | 1940–1956 | Vacuum Tubes | Milliseconds | Machine (Binary) |
| 2nd Gen | 1956–1963 | Transistors | Microseconds | Assembly / COBOL |
| 3rd Gen | 1964–1971 | Integrated Circuits (IC) | Nanoseconds | High-Level (BASIC, Pascal) |
| 4th Gen | 1971–Present | Microprocessors (VLSI) | Picoseconds | SQL / C++ / Python |
| 5th Gen | Present–Future | AI / Parallel Processing | FLOPS (floating-point operations per second) | Natural Language |

How Have Computer Generations Overlapped?

History is rarely clean. Transistors were invented in 1947, yet vacuum tube computers were built until the mid-50s. Similarly, while we are entering the AI (5th) era, the backbone of our infrastructure relies on 4th generation microprocessors. The transition is currently visible in hybrid chips: processors that contain traditional CPU cores (4th Gen) alongside dedicated AI cores (5th Gen) on the same die.

Frequently Asked Questions

What are the 5 generations of computers with their periods?

First (1940-1956): Vacuum Tubes.

Second (1956-1963): Transistors.

Third (1964-1971): Integrated Circuits.

Fourth (1971-Present): Microprocessors.

Fifth (Present-Future): Artificial Intelligence & Quantum.

Why is the timeline for the 4th generation so long?

The 4th generation is defined by the microprocessor. While speed and density (Moore’s Law) have increased exponentially, the fundamental architecture (CPU on a chip) has remained the standard since 1971. We are only now transitioning out of it as we hit the physical limits of silicon and require quantum or neural architectures for further growth.
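The exponential growth behind Moore's Law can be worked through with a few lines of arithmetic. The sketch below starts from the Intel 4004's 2,300 transistors in 1971 and assumes a doubling every two years, which is the commonly quoted form of the law. It is an idealized projection, not a record of actual chip transistor counts, and `projected_transistors` is a name chosen for the example.

```python
def projected_transistors(year: int, base_year: int = 1971,
                          base_count: int = 2300,
                          doubling_years: float = 2.0) -> float:
    """Project a transistor count assuming a fixed doubling period."""
    doublings = (year - base_year) / doubling_years
    return base_count * 2 ** doublings


for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(year):,.0f}")
```

Under these assumptions, 50 years means 25 doublings, which takes the count from thousands into the tens of billions. That is how a single architecture could stay in place for so long while its capabilities changed beyond recognition.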

Kaleem

My name is Kaleem, and I am a computer science graduate with 5+ years of experience in computer science, AI, tech, and web innovation. I founded ValleyAI.net to simplify AI, internet, and computer topics, and I also focus on building useful utility tools. My clear, hands-on content is trusted by 5K+ monthly readers worldwide.