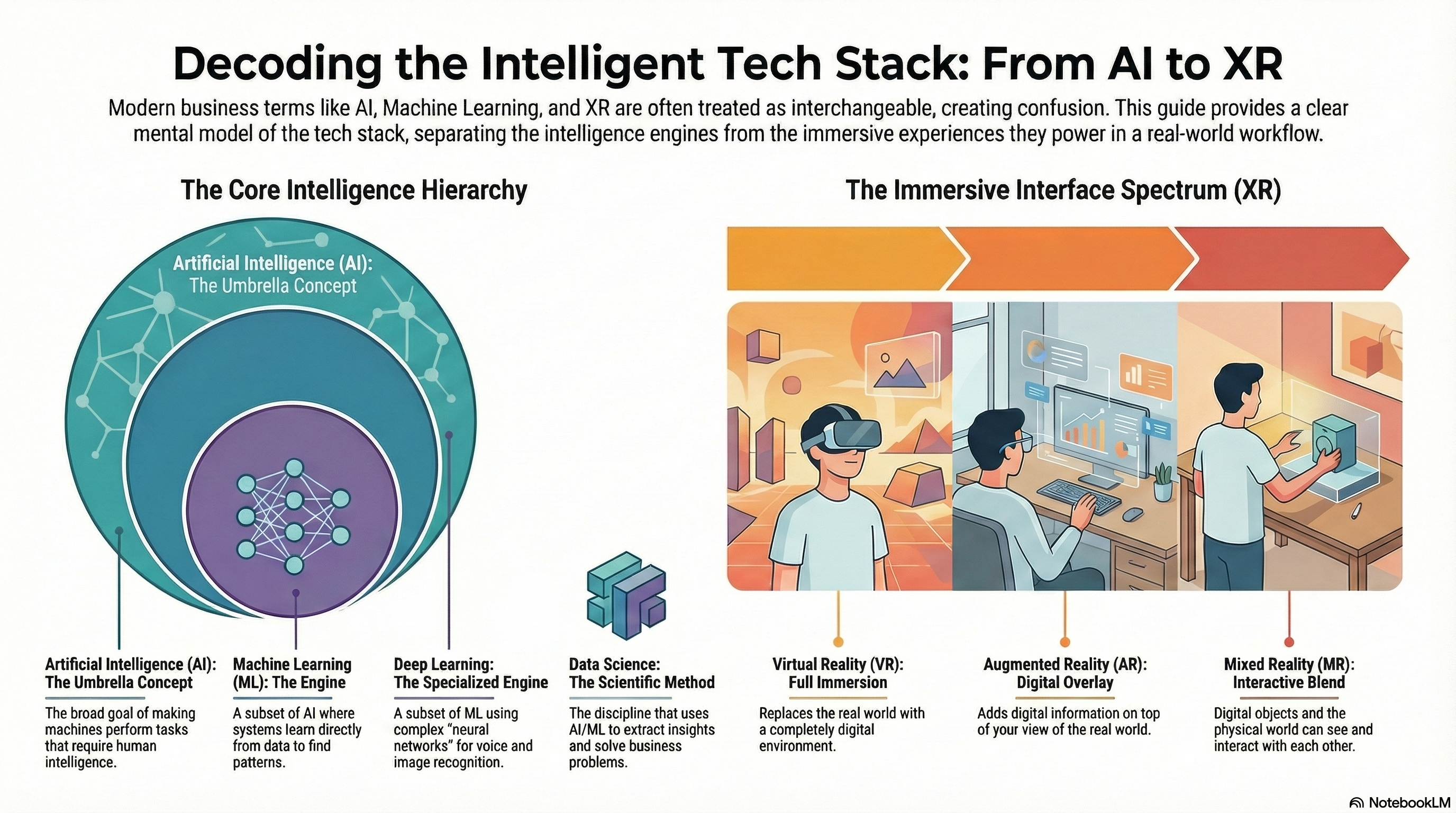

Decoding the intelligent tech stack

In modern business strategy, terms like “AI,” “Machine Learning,” and “XR” are often treated as interchangeable buzzwords. They are tossed into a tech salad during quarterly reviews, creating a foggy understanding of what the technology actually does.

For a practitioner or a leader, this ambiguity is a risk. You cannot build a strategy if you cannot distinguish between the science (Data Science), the tool (AI/ML), and the interface (XR).

This guide functions as an operational glossary. We aren’t looking at textbook definitions that gather dust; we are defining these concepts by how they function in a real-world workflow. The goal is to provide a clear mental model of the hierarchy, separating the intelligence engines from the immersive experiences they power.

Part 1: The Core Intelligence (AI Hierarchy)

What is Artificial Intelligence (AI)?

At its most fundamental level, Artificial Intelligence (AI) is the broad umbrella term for any computer system capable of performing tasks that typically require human intelligence. These tasks include visual perception, speech recognition, decision-making, and translation.

It is critical to understand that AI is the goal, not the specific method. If a machine can plan, reason, or solve a problem, it falls under the AI umbrella, regardless of how simple the code underneath might be.

Mechanics in Action:

- Video Game NPCs: In many games, Non-Player Characters (NPCs) use “AI” to decide whether to attack or hide. Often, this isn’t complex learning; it is a rigid decision tree (e.g., If player is < 10 meters away, then attack). It mimics intelligence, so it counts as AI.

- Smart Thermostats: Devices like Nest don’t just record temperature; they learn schedules. If you manually lower the heat every night at 10 PM for a week, the system recognizes the pattern and automates it.
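
The NPC rule above can be sketched in a few lines. This is a minimal illustration, not code from any real game engine: the `Npc` class, the `health` field, and the "hide" and "patrol" branches are hypothetical additions. Only the 10-meter attack rule comes from the example.

```python
from dataclasses import dataclass

ATTACK_RANGE_M = 10.0  # threshold taken from the NPC example above


@dataclass
class Npc:
    health: int  # hypothetical field, 0 to 100


def choose_action(npc: Npc, distance_to_player_m: float) -> str:
    """Hand-written decision tree: fixed rules, no learning involved."""
    if npc.health < 25:  # hypothetical extra rule for illustration
        return "hide"
    if distance_to_player_m < ATTACK_RANGE_M:
        return "attack"
    return "patrol"


print(choose_action(Npc(health=80), 4.0))   # attack
print(choose_action(Npc(health=80), 30.0))  # patrol
print(choose_action(Npc(health=10), 4.0))   # hide
```

Nothing here adapts to the player. The behavior only changes when a developer edits the rules, which is why this counts as AI but not as Machine Learning.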

Types of AI: The Capability Spectrum

To understand where the market is versus where science fiction says we are, we must categorize AI by capability.

1. Narrow AI (ANI)

Artificial Narrow Intelligence (ANI) is AI designed to perform a single, specific task extremely well.

- The Reality Check: Every AI system in use today, from ChatGPT to autonomous vehicles, is Narrow AI. A chess-playing AI can beat a Grandmaster, but it cannot boil an egg or write a poem. It is brilliant in its lane and incompetent everywhere else.

- Example: Email Spam Filters. These systems analyze metadata and keywords to flag junk mail with high accuracy. However, that same algorithm cannot read the email to summarize its sentiment; it only knows spam or not spam.

2. General AI (AGI)

Artificial General Intelligence (AGI) refers to a hypothetical machine possessing human-level cognitive abilities.

- The Context: The defining characteristic of AGI would be transfer learning: the ability to learn a skill in one domain (like driving) and apply that logic to a new, unrelated domain (like navigating a warehouse) without being reprogrammed.

- Current Status: This does not exist yet. While Large Language Models (LLMs) mimic general conversation, they lack genuine reasoning or understanding of the physical world.

3. Super AI (ASI)

Artificial Super Intelligence (ASI) is the theoretical point where machine intelligence surpasses the brightest human minds in every field, from creativity to general wisdom.

- The Context: This is the realm of the singularity. It assumes that once an AI is smart enough to write its own code, it will improve itself recursively at an exponential rate.

- Current Status: Purely theoretical.

Part 2: The Engine Room (How AI Works)

If AI is the car, Machine Learning is the engine that makes it move.

What is Machine Learning (ML)?

Machine Learning (ML) is a subset of AI. It refers to the specific practice of using algorithms that improve automatically through experience (data).

In traditional programming, a human writes explicit rules (“If X happens, do Y”). In Machine Learning, the human provides the goal and the data, and the machine figures out the rules itself by identifying patterns.
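
The contrast can be shown with a toy example. In the sketch below, `rule_based` uses a cutoff a human chose, while `fit_threshold` derives its cutoff from labeled examples. The feature (number of links in an email), the data, and both function names are assumptions made up for illustration. Real ML systems use far richer models than a single threshold.

```python
def rule_based(link_count: int) -> bool:
    # Traditional programming: a human picked this cutoff by hand.
    return link_count > 5


def fit_threshold(examples: list) -> int:
    """Pick the cutoff that misclassifies the fewest labeled examples."""
    candidates = sorted({x for x, _ in examples})
    best_cutoff, best_errors = candidates[0], len(examples) + 1
    for cutoff in candidates:
        # Count examples where "x > cutoff" disagrees with the label.
        errors = sum((x > cutoff) != label for x, label in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Hypothetical labeled data: (links in email, is_spam)
training = [(0, False), (1, False), (2, False), (3, True), (4, True), (7, True)]

cutoff = fit_threshold(training)
print(cutoff)          # 2
print(3 > cutoff)      # True: the learned rule flags an email with 3 links
print(rule_based(3))   # False: the hand-written rule misses it
```

The human supplied the goal (fewest mistakes) and the data. The cutoff itself came from the data.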

Mechanics in Action:

- Spotify Recommendation Engine: The system doesn’t know you like rock music because a human tagged you. It uses collaborative filtering. It looks at millions of other users who listened to the same songs you did, sees what else they listened to, and predicts you will like those tracks too.

- Credit Card Fraud Detection: Instead of programming infinite rules about what fraud looks like, banks feed an ML algorithm millions of past transactions (both legitimate and fraudulent). The system learns the subtle mathematical texture of a fraudulent transaction (e.g., specific timing, location jumps, or spending velocity) to flag anomalies in real-time.
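
The collaborative filtering idea from the Spotify example can be sketched as follows. This is a simplified illustration under stated assumptions, not Spotify's actual system: the `history` data, the user names, and the `recommend` function are hypothetical, and similarity is reduced to a raw count of shared tracks.

```python
from collections import Counter

# Hypothetical listening history: user -> set of track names.
history = {
    "you": {"Song A", "Song B"},
    "user1": {"Song A", "Song B", "Song C"},
    "user2": {"Song A", "Song D"},
    "user3": {"Song E"},
}


def recommend(target: str, history: dict, top_n: int = 2) -> list:
    """Score unseen tracks by how much their listeners overlap with the target."""
    seen = history[target]
    scores = Counter()
    for user, tracks in history.items():
        if user == target:
            continue
        overlap = len(seen & tracks)  # shared tracks act as a similarity weight
        for track in tracks - seen:
            scores[track] += overlap
    # Drop tracks that only came from users with no overlap at all.
    return [track for track, score in scores.most_common(top_n) if score > 0]


print(recommend("you", history))  # ['Song C', 'Song D']
```

No one tagged any track with a genre. The ranking comes entirely from who listened to what.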

What is Deep Learning?

Deep Learning is a specialized, more complex subset of Machine Learning. It uses Artificial Neural Networks (layered algorithmic structures inspired by the human brain) to solve complex problems.

Standard ML requires a human to tell the computer what features to look for (e.g., look for round shapes to find a ball). Deep Learning figures out the features itself. It is particularly dominant in processing unstructured data like images, audio, and video.
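
To make "layered" concrete, here is a sketch of a forward pass through a tiny two-layer network that computes XOR. One important assumption: the weights below are hand-picked so the example stays short and checkable. In real Deep Learning, those numbers are learned from data during training. The names `dense`, `xor_net`, and the weight constants are illustrative.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def dense(inputs: list, weights: list, biases: list) -> list:
    """One fully connected layer: weighted sum per neuron, then ReLU."""
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked for illustration; a trained network would learn these values.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [1.0, -2.0]


def xor_net(x1: float, x2: float) -> float:
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B)  # intermediate features
    return sum(w * h for w, h in zip(OUTPUT_W, hidden))


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_net(a, b))  # outputs 0.0, 1.0, 1.0, 0.0
```

The hidden layer produces intermediate features that the output layer combines. Stacking many such layers is what puts the "deep" in Deep Learning.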

Mechanics in Action:

- Voice Assistants (Siri/Alexa): Deep learning models analyze the raw sound waves of your voice. They break the audio down into phonemes (sounds), assemble them into words, and infer meaning using Natural Language Processing (NLP).

- Generative Art (Midjourney/DALL-E): These systems are trained on billions of image-text pairs. They learn statistical associations between words such as “sunset” and visual features such as orange and purple hues, allowing them to generate new images from scratch based on text prompts.

What is Data Science?

Data Science is the interdisciplinary field that extracts value and insight from data.

While AI and ML are tools used by data scientists, Data Science also encompasses statistics, data cleaning, data visualization, and business domain expertise. Think of Data Science as the scientific method applied to business problems.

Mechanics in Action:

- Predictive Maintenance: A factory uses data science to analyze vibration logs from conveyor belts. By applying statistical models, they can predict that a bearing will fail in 48 hours, allowing them to replace it during a lunch break rather than shutting down the line after a crash.

- Logistics Optimization: UPS uses data science to optimize delivery routes. This involves not just ML for traffic prediction, but also statistical analysis of package volume, fuel costs, and driver shifts to minimize left-hand turns (which waste fuel).
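
The predictive maintenance example can be sketched with the simplest possible statistical model: fit a straight line to vibration readings and estimate when it crosses a failure level. Everything specific here is an assumption for illustration, including the readings, the 4.5 mm/s failure level, and the function names. Real systems typically use richer models and many more sensors.

```python
def fit_line(xs: list, ys: list) -> tuple:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def hours_until_failure(hours: list, vibration: list, failure_level: float):
    """Extrapolate the trend to the failure level; None if there is no upward trend."""
    slope, intercept = fit_line(hours, vibration)
    if slope <= 0:
        return None
    crossing_hour = (failure_level - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical log: hours since monitoring began, vibration in mm/s.
hours = [0, 24, 48, 72]
vibration = [2.0, 2.5, 3.0, 3.5]

remaining = hours_until_failure(hours, vibration, failure_level=4.5)
print(round(remaining))  # 48
```

The output is an insight for a human planner, who then decides when to schedule the replacement.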

Summary: The Distinction

- Data Science produces insights (humans make better decisions).

- Machine Learning produces predictions (computers make better guesses).

- Artificial Intelligence produces actions (systems mimic human behavior).

Part 3: Perception and Immersion (Vision & XR)

While AI processes information, Extended Reality (XR) changes how humans perceive and interact with that information.

What is Computer Vision?

Computer Vision is the field of AI that enables computers to see and interpret visual information from the real world. It turns pixels into metadata.

Mechanics in Action:

- Medical Imaging: AI models scan X-rays and MRIs to detect anomalies like tumors. The system doesn’t just see the image; it classifies pixel clusters that deviate from healthy tissue patterns.

- Automated Tolls: Cameras capture a car moving at 60 mph. Computer vision isolates the license plate, adjusts for lighting and blur, and converts the image of the numbers into text data for billing.
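
"Pixels into metadata" can be illustrated with a deliberately simple sketch: find the bright region in a tiny grayscale image and report its bounding box. The image values, the threshold of 128, and the `bright_region` function are assumptions for illustration. Production systems use trained models rather than a fixed brightness threshold, but the input and output have the same shape: a grid of numbers goes in, and a structured description comes out.

```python
# Hypothetical 4x5 grayscale image (0 = black, 255 = white).
image = [
    [10, 12, 11, 10, 9],
    [11, 200, 210, 12, 10],
    [10, 205, 220, 11, 9],
    [9, 10, 12, 10, 11],
]


def bright_region(image: list, threshold: int = 128):
    """Return the bounding box of all pixels brighter than the threshold."""
    coords = [
        (r, c)
        for r, row in enumerate(image)
        for c, value in enumerate(row)
        if value > threshold
    ]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return {
        "top": min(rows),
        "left": min(cols),
        "bottom": max(rows),
        "right": max(cols),
        "pixel_count": len(coords),
    }


print(bright_region(image))
# {'top': 1, 'left': 1, 'bottom': 2, 'right': 2, 'pixel_count': 4}
```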

What is Extended Reality (XR)?

Extended Reality (XR) is the umbrella term for all immersive technologies. It includes Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). These technologies merge the physical and virtual worlds to varying degrees.

1. Virtual Reality (VR)

Virtual Reality is a fully digital environment. When you put on a VR headset, the real world is completely blocked out and replaced by a computer-generated simulation.

- Mechanics in Action: Employee Safety Training. Walmart uses VR to train employees on how to handle Black Friday crowds or hazardous spills. The employee feels immersed in the chaos without the actual physical risk.

2. Augmented Reality (AR)

Augmented Reality overlays digital elements onto the real world, usually viewed through a smartphone screen or smart glasses. The digital objects do not necessarily interact with the physical room; they just float on top of the camera feed.

- Mechanics in Action: IKEA Place App. You hold up your phone, and the app superimposes a 3D model of a sofa onto your living room floor. You can see your real rug and walls, but the sofa is digital.

3. Mixed Reality (MR)

Mixed Reality is the hybrid where digital and physical objects coexist and interact in real-time. Unlike AR, where an object might float ghost-like over a table, in MR, the digital object knows the table is there and will sit on it. If you look under the table, the object is occluded (blocked) by the physical wood.

- Mechanics in Action: Microsoft HoloLens in Engineering. An engineer can view a holographic jet engine hovering in the middle of the room. They can reach out and turn a digital dial, and the hologram responds. The hologram stays anchored to a specific physical point in the room, even if the engineer walks away and comes back.
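
The occlusion behavior that separates MR from a simple overlay comes down to a per-pixel depth comparison. The sketch below works on a single row of pixels and uses made-up labels and depths. The `composite` function and its inputs are hypothetical, and this is not how any specific headset implements rendering.

```python
def composite(camera_pixels, real_depth_m, hologram_pixels, hologram_depth_m):
    """Show the hologram only where it is nearer to the viewer than the real surface."""
    output = []
    for cam, real_d, holo, holo_d in zip(
        camera_pixels, real_depth_m, hologram_pixels, hologram_depth_m
    ):
        if holo is not None and holo_d < real_d:
            output.append(holo)  # hologram is in front of the real surface
        else:
            output.append(cam)   # real surface hides (occludes) the hologram
    return output


# Hypothetical row of three pixels: a table edge at 1 m, a wall at 3 m.
camera = ["wood", "wood", "wall"]
real_depth = [1.0, 1.0, 3.0]
hologram = ["holo", "holo", "holo"]
hologram_depth = [2.0, 0.5, 2.0]

print(composite(camera, real_depth, hologram, hologram_depth))
# ['wood', 'holo', 'holo']
```

In the first pixel the hologram sits behind the table, so the wood wins. A plain overlay would skip the depth check and always draw the hologram on top.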

Part 4: The Convergence: Where AI Meets XR

The most powerful modern applications occur where these fields overlap. XR provides the interface, but AI provides the understanding of the world required to make that interface work.

Spatial Computing

For Mixed Reality to work, the device must understand the geometry of the room. It uses Computer Vision (AI) to map the walls, floor, and furniture in milliseconds. Without AI, the digital ball you throw in an MR game wouldn’t bounce off the real wall; it would just fly through it.
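
Once the room is mapped, the bounce itself is ordinary vector math: reflect the ball's velocity about the wall's surface normal, v' = v - 2(v·n)n. The sketch below assumes the device has already produced a unit-length normal for the detected wall. The `reflect` function and the example numbers are illustrative.

```python
def reflect(velocity: tuple, wall_normal: tuple) -> tuple:
    """Reflect a velocity vector about a wall; wall_normal must be unit length."""
    dot = sum(v * n for v, n in zip(velocity, wall_normal))
    return tuple(v - 2 * dot * n for v, n in zip(velocity, wall_normal))


# Ball moving mostly along +x hits a wall whose normal points back along -x.
velocity = (3.0, 0.0, -1.0)
wall_normal = (-1.0, 0.0, 0.0)

print(reflect(velocity, wall_normal))  # (-3.0, 0.0, -1.0)
```

The math is simple. The hard part, and the part that needs Computer Vision, is knowing where the wall is and which way it faces.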

Hand Tracking

In modern VR headsets (like the Meta Quest), you don’t always need controllers. The cameras on the headset use Deep Learning to track the skeletal position of your fingers in real-time, translating your physical hand movements into digital interactions instantly.
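
The tracking model's output is typically a set of 3D joint positions, and turning those into an interaction can be very simple. The sketch below detects a pinch by measuring the distance between thumb tip and index tip. The joint names, the 2 cm threshold, and the sample coordinates are assumptions for illustration and do not reflect any particular headset's SDK.

```python
import math

PINCH_THRESHOLD_M = 0.02  # assumed: fingertips within 2 cm count as a pinch


def is_pinching(joints: dict) -> bool:
    """joints maps a joint name to an (x, y, z) position in meters."""
    distance = math.dist(joints["thumb_tip"], joints["index_tip"])
    return distance < PINCH_THRESHOLD_M


# Hypothetical output of a hand-tracking model for two frames.
pinched = {"thumb_tip": (0.10, 0.20, 0.30), "index_tip": (0.11, 0.20, 0.30)}
open_hand = {"thumb_tip": (0.10, 0.20, 0.30), "index_tip": (0.15, 0.25, 0.30)}

print(is_pinching(pinched))    # True
print(is_pinching(open_hand))  # False
```

Deep Learning does the hard work of estimating the joint positions from camera images. The gesture logic on top can stay rule-based.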

Conclusion

Understanding these definitions is about more than vocabulary; it is about understanding the architecture of modern solutions.

- Data Science helps you ask the right questions.

- Machine Learning processes the data to answer them.

- AI automates the action based on that answer.

- XR allows us to step inside that data and interact with it intuitively.

As these technologies continue to converge, the distinction between digital and physical operations will continue to blur. The winners will be those who understand how to assemble these distinct tools into a cohesive strategy.

Recommended Next Steps

- Explore Generative AI: Dive deeper into how LLMs (Large Language Models) are changing the Narrow AI landscape.

- Data Governance: Learn why clean data is the prerequisite for any Machine Learning project.

- Industrial Metaverse: Investigate how MR and Digital Twins are being applied in manufacturing and logistics.

Admin

My name is Kaleem, and I am a computer science graduate with 5+ years of experience in AI tools, tech, and web innovation. I founded ValleyAI.net to simplify AI, internet, and computer topics, and I also focus on building useful utility tools. My clear, hands-on content is trusted by 5K+ monthly readers worldwide.